😄2.集群环境搭建

集群环境搭建

环境规划

集群类型

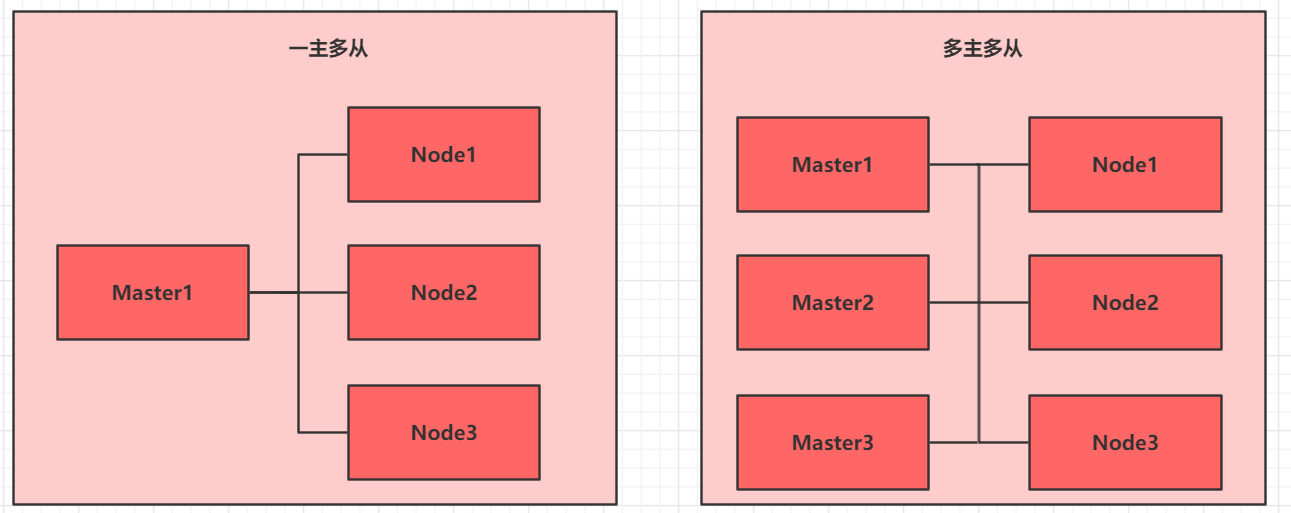

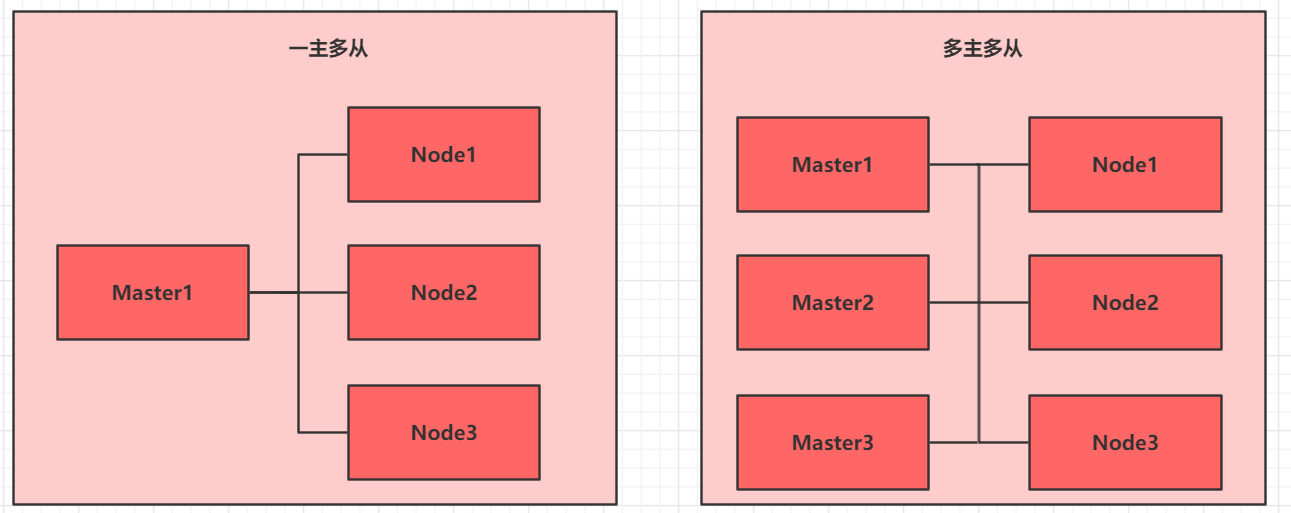

kubernetes集群大体上分为两类:一主多从和多主多从

- 一主多从:一台master,多台node,搭建简单,但是master出现错误,整个集群不再可用

- 多主多从:多台master,多台node,搭建麻烦,但是容错率高

为了简单,本文中使用一主两从

安装方式

目前生产部署Kubernetes 集群主要有两种方式:

1.kubeadm(本文中采用)

Kubeadm 是一个K8s 部署工具,提供kubeadm init 和kubeadm join,用于快速部署Kubernetes 集群。

官方地址:https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm/

2.二进制包

从github 下载发行版的二进制包,手动部署每个组件,组成Kubernetes 集群。

Kubeadm 降低部署门槛,但屏蔽了很多细节,遇到问题很难排查。如果想更容易可控,推荐使用二进制包部署Kubernetes 集群,虽然手动部署麻烦点,期间可以学习很多工作原理,利于后期维护。

kubeadm 部署

kubeadm 是官方社区推出的一个用于快速部署kubernetes 集群的工具,这个工具能通过两条指令完成一个kubernetes 集群的部署:

- 创建一个Master 节点kubeadm init

- 将Node 节点加入到当前集群中$ kubeadm join <Master 节点的IP 和端口>

安装要求

在开始之前,部署Kubernetes 集群机器需要满足以下几个条件:

- 一台或多台机器,操作系统CentOS7.x-86_x64

- 硬件配置:2GB 或更多RAM,2 个CPU 或更多CPU,硬盘30GB 或更多

- 集群中所有机器之间网络互通

- 可以访问外网,需要拉取镜像

- 禁止swap 分区

最终目标

- 在所有节点上安装Docker 和kubeadm

- 部署Kubernetes Master

- 部署容器网络插件

- 部署Kubernetes Node,将节点加入Kubernetes 集群中

- 部署Dashboard Web 页面,可视化查看Kubernetes 资源

准备环境

| 角色 | IP地址 | 组件 |

|---|

| k8s-master01 | 192.168.5.3 | docker,kubectl,kubeadm,kubelet |

| k8s-node01 | 192.168.5.4 | docker,kubectl,kubeadm,kubelet |

| k8s-node02 | 192.168.5.5 | docker,kubectl,kubeadm,kubelet |

系统初始化

分为以下十个步骤

1.设置系统主机名以及 Host 文件的相互解析

1

2

3

4

| #设置主机名

hostnamectl set-hostname k8s-master01 && bash

hostnamectl set-hostname k8s-node01 && bash

hostnamectl set-hostname k8s-node02 && bash

|

1

2

3

4

5

6

| #修改hosts文件

cat <<EOF>> /etc/hosts

192.168.5.3 k8s-master01

192.168.5.4 k8s-node01

192.168.5.5 k8s-node02

EOF

|

1

2

3

| #将hosts文件给其他两个主机复制一份传过去

scp /etc/hosts root@192.168.5.4:/etc/hosts

scp /etc/hosts root@192.168.5.5:/etc/hosts

|

2.安装依赖文件(所有节点都要操作)

1

| yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

|

3.设置防火墙为 Iptables 并设置空规则(所有节点都要操作)

1

2

3

| systemctl stop firewalld && systemctl disable firewalld

yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

|

4.关闭 SELINUX(所有节点都要操作)

1

2

3

| swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

|

5.调整内核参数,对于 K8S(所有节点都要操作)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

| modprobe br_netfilter

cat <<EOF> kubernetes.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0 # 禁止使用 swap 空间,只有当系统 OOM 时才允许使用它

vm.overcommit_memory=1 # 不检查物理内存是否够用

vm.panic_on_oom=0 # 开启 OOM

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

sysctl -p /etc/sysctl.d/kubernetes.conf

|

6.调整系统时区(所有节点都要操作)

1

2

3

4

5

6

7

| # 设置系统时区为 中国/上海

timedatectl set-timezone Asia/Shanghai

# 将当前的 UTC 时间写入硬件时钟

timedatectl set-local-rtc 0

# 重启依赖于系统时间的服务

systemctl restart rsyslog

systemctl restart crond

|

7.设置 rsyslogd 和 systemd journald(所有节点都要操作)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

| # 持久化保存日志的目录

mkdir /var/log/journal

mkdir /etc/systemd/journald.conf.d

cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF

[Journal]

# 持久化保存到磁盘

Storage=persistent

# 压缩历史日志

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

# 最大占用空间 10G

SystemMaxUse=10G

# 单日志文件最大 200M

SystemMaxFileSize=200M

# 日志保存时间 2 周

MaxRetentionSec=2week

# 不将日志转发到 syslog

ForwardToSyslog=no

EOF

systemctl restart systemd-journald

|

8.kube-proxy开启ipvs的前置条件(所有节点都要操作)

1

2

3

4

5

6

7

8

9

10

| cat <<EOF> /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

|

9.安装 Docker 软件(所有节点都要操作)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

| yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce

## 创建 /etc/docker 目录

mkdir /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

}

}

EOF

mkdir -p /etc/systemd/system/docker.service.d

# 重启docker服务,设置开机自启

systemctl daemon-reload && systemctl restart docker && systemctl enable docker

|

上传文件到/etc/yum.repos.d/目录下,也可以 代替 yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo 命令

docker-ce.repo

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

| [docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-debuginfo]

name=Docker CE Stable - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/stable

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-source]

name=Docker CE Stable - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/stable

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test]

name=Docker CE Test - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-debuginfo]

name=Docker CE Test - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-source]

name=Docker CE Test - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly]

name=Docker CE Nightly - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-debuginfo]

name=Docker CE Nightly - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-source]

name=Docker CE Nightly - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

|

10.安装 Kubeadm (所有节点都要操作)

1

2

3

4

5

6

7

8

9

10

11

12

13

| #切换镜像源

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

#安装kubelet,kubeadm,kubectl

yum install -y kubelet kubeadm kubectl && systemctl enable kubelet

|

部署Kubernetes Master

分为以下三个步骤

1.初始化主节点(主节点操作)

1

2

3

4

5

6

7

8

9

10

11

12

| kubeadm init \

--apiserver-advertise-address=192.168.5.3 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.21.1 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

|

2.加入主节点以及其余工作节点

1

2

3

4

| #将工作节点加入集群

kubeadm join 192.168.5.3:6443 --token h0uelc.l46qp29nxscke7f7 \

#下面的代码是master自动生成的,每个master都不一样

--discovery-token-ca-cert-hash sha256:abc807778e24bff73362ceeb783cc7f6feec96f20b4fd707c3f8e8312294e28f

|

3.部署网络(master节点执行)

1

| kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

|

下边是上面所说的yml文件

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

| ---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN', 'NET_RAW']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay-mirror.qiniu.com/coreos/flannel:v0.14.0

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay-mirror.qiniu.com/coreos/flannel:v0.14.0

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

- --iface=en02

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

|

测试kubernetes 集群

部署nginx 测试

1

2

3

4

5

6

7

| #运行nginx程序

kubectl create deployment nginx --image=nginx

#暴露端口

kubectl expose deployment nginx --port=80 --type=NodePort

#查看服务的状态

kubectl get pod

kubectl get svc

|

来源

视频:

黑马